For the first time in my career, I’m considering NOT publishing SEO research, and I feel very conflicted about it, writes Cyrus Shepard.

I’m working on a model to predict whether Google might classify a website as “Unhelpful Content.”

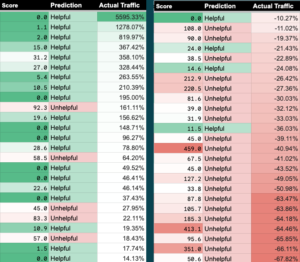

Currently it’s 82% accurate in predicting traffic gains/losses for sites I’m tracking, and I think it can be made even better.

What surprised me is that it appears Google is actually targeting sites employing common SEO practices—a type of “over-optimization,” you might say.

I can’t be positive, but that’s what the data suggests.

Not to be paranoid, but it’s as if Google read our SEO posts over the last few years and then set out to negate the tactics that were working.

So do I publish my research to push back against Google’s work, which was a response to SEO work, only to force Google to respond again?

How many thousands of hours and millions of dollars are wasted on both sides?

After a while, it seems like a pointless circle.

I know it’s always been a back-and-forth between Google and publishers, but at a certain point, it feels like we should be on the same side here.

I’m primarily a researcher.

I try to create good content, and I believe in making the web a better place for everyone.

My job is to help businesses fight for visibility in a sea of sameness and punch above their weight, often against bigger brands and sometimes spammers.

To be honest, I wish there were a way we could help Google build its algorithms to support creators, rather than constantly reverse-engineering them to gain an edge.

I don’t like giving spammers an advantage, but I also don’t know how to do what I do without transparency.

On the other hand, if I don’t publish this research, good websites might be shooting themselves in the foot with outdated SEO advice.