Here’s what I think you need to do to recover your site’s SEO (and why I think so many sites got squashed), wrote Thomas Jepsen.

- I think HCU was in large part a defense algorithm against low-quality AI content.

We saw a good number of results where sites put out bad AI content, not in the hundreds of pages, but often in tens of thousands.

HCU was strong enough that it would demolish low-authority sites.

In my research, I’ve seen tons of sites fall 90% or more.

Google needed some level of protection against these sites going up.

Which leads me to what I believe HCU looks at.

- Google has repeatedly told us to make websites for users, not for machines.

In reality, we didn’t.

SEOs saw that you could usually squeeze out more traffic by being fairly aggressive in your optimization…

And there’s one thing I specifically think Google went after…

Google’s mission has always been to serve up the best content, but they had kept rewarding optimized SEO content…

Content where SEOs squeeze keywords into the headings to answer very specific queries.

In all honesty, I feel SEOs refused to listen because it worked.

Across both my own projects and other sites, I’ve consistently seen this trend when significantly lowering the optimization of headings…

Individual pages have started to rise immensely.

Google doesn’t want hyper-optimized pages, they want the best content…

And they just decided to take extreme action against people creating content for search engines.

Google would rather surface high-quality hobbyist content (arguably UGC, forums, etc.) than content from someone who has found out he can make money from affiliate reviews.

But content sites… Still work…

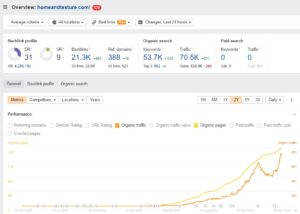

That is a content site.

But practically all content sites I’ve seen continue to succeed have fairly deoptimized H2s and H3s (measured by how much they overlap with the H1).
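One way to put a rough number on "overlap with the H1" is to check how many words in each H2/H3 also appear in the H1. The sketch below is only an illustrative heuristic, not a metric Google is known to use: the `h1_overlap` function, the word-set comparison, and the sample HTML are all assumptions made for the example.

```python
import re
from html.parser import HTMLParser


class HeadingCollector(HTMLParser):
    """Collects the text of h1, h2 and h3 elements in document order."""

    def __init__(self):
        super().__init__()
        self.headings = []    # list of (tag, text) tuples
        self._current = None  # heading tag currently being read, if any
        self._buffer = []

    def handle_starttag(self, tag, attrs):
        if tag in ("h1", "h2", "h3"):
            self._current = tag
            self._buffer = []

    def handle_data(self, data):
        if self._current:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == self._current:
            self.headings.append((tag, "".join(self._buffer).strip()))
            self._current = None


def tokens(text):
    """Lowercased set of words; no stemming or stop-word removal."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def h1_overlap(html):
    """For each H2/H3, return the share of its words that also appear in the H1."""
    parser = HeadingCollector()
    parser.feed(html)
    h1_words = set()
    for tag, text in parser.headings:
        if tag == "h1":
            h1_words = tokens(text)
            break
    results = []
    for tag, text in parser.headings:
        if tag == "h1":
            continue
        words = tokens(text)
        share = len(words & h1_words) / len(words) if words else 0.0
        results.append((tag, text, round(share, 2)))
    return results


# Hypothetical page: one keyword-stuffed H2, two plain subheadings.
sample = """
<h1>Best Standing Desk for Home Office</h1>
<h2>What Is the Best Standing Desk for Home Office Use?</h2>
<h2>How we tested</h2>
<h3>Assembly notes</h3>
"""
for row in h1_overlap(sample):
    print(row)
```

On this sample the first H2 scores 0.6 and the other two headings score 0.0. Any cutoff for "too optimized" would be a guess; the score is only useful for comparing pages against each other.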

However, I think being great at other components increases…

The optimization threshold you can get away with.

As is the case in the site screenshotted…

They have…

- Many divs
- Tables
- Nicely laid out

However, it’s THE only low-ish authority content site I’ve been able to find that has gotten away with it.

Getting back to it, I profoundly believe “real businesses” were spared for a couple of reasons.

- Real businesses were spared in the HCU (mainly) because their proportion of super-optimized articles is rather low compared to their overall index.

But they were also spared because they have so many other things going on (I wouldn’t necessarily try to replicate it for your average content site)…

Remember I said I believe the level of optimization you can get away with depends on how many things you do right.

These businesses sometimes get brand searches that you won’t get as an average content site, but I’ve also shared examples of content sites that don’t get them.

The site listed is homeandtexture.com and it has seen tons of success but just doesn’t aggressively optimize headings:

… & again, most content sites I’ve seen succeed share this trait.

If you’re an e-commerce site with 300 blog posts but an otherwise not…

overly optimized site with 3k indexed pages, the hyper-optimized part makes up a much smaller fraction.
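The arithmetic behind the e-commerce example above can be sketched as follows. It assumes all 300 blog posts count as hyper-optimized, and the comparison site (a content site where every indexed page is an optimized article) is hypothetical; `optimized_share` is just an illustrative helper name.

```python
def optimized_share(optimized_pages, indexed_pages):
    """Fraction of a site's indexed pages that are heavily optimized."""
    if indexed_pages <= 0:
        raise ValueError("indexed_pages must be positive")
    return optimized_pages / indexed_pages


# Figures from the example above: 300 blog posts, 3k indexed pages.
ecommerce = optimized_share(300, 3000)

# Hypothetical content site where every indexed page is an optimized article.
content_site = optimized_share(300, 300)

print(f"{ecommerce:.0%} vs {content_site:.0%}")  # prints "10% vs 100%"
```

The same 300 articles are a tenth of the e-commerce site's index but the entire index of the content site.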

The ball’s in your court.